Jiawei Fan is an AI Research Scientist at Intel Labs China, supervised by Anbang Yao. His research interests span computer vision and model acceleration in deep learning. Previously, he explored knowledge transfer between models, contributing to model compression and training acceleration for omni-scale high-performance artificial intelligence systems.

He received his Master's degree in Computer Science from Beijing University of Posts and Telecommunications (BUPT), supervised by Meina Song. Before that, he received a first-class honours Bachelor of Science and Engineering degree from the joint program of Beijing University of Posts and Telecommunications (BUPT) and Queen Mary University of London (QMUL).

🔥 News

- 2025.05: A paper on a diffusion acceleration framework that can accelerate any type of diffusion model has been accepted to ICML 2025.

- 2024.09: A first-author paper exploring a knowledge distillation (KD) method for transferring the scalability of pre-trained ViTs to various architectures has been accepted to NeurIPS 2024.

- 2023.09: A first-author paper on efficient knowledge distillation for semantic segmentation has been accepted to NeurIPS 2023.

- 2022.12: A co-first-author paper on video self-supervised learning (SSL) has been accepted to ACCV 2022 and received the Best Paper Award Honorable Mention.

- 2022.07: A first-author paper on high-performance parameter-free regularization method for action recognition has been accepted to ACM MM 2022.

- 2022.03: A first-author paper on few-shot learning has been accepted to ICPR 2022.

- 2021.05: Ranked 7th in the 3rd Person in Context Workshop at CVPR 2021.

📝 Publications

(* Equal contribution, # Corresponding author)

Selected Publications

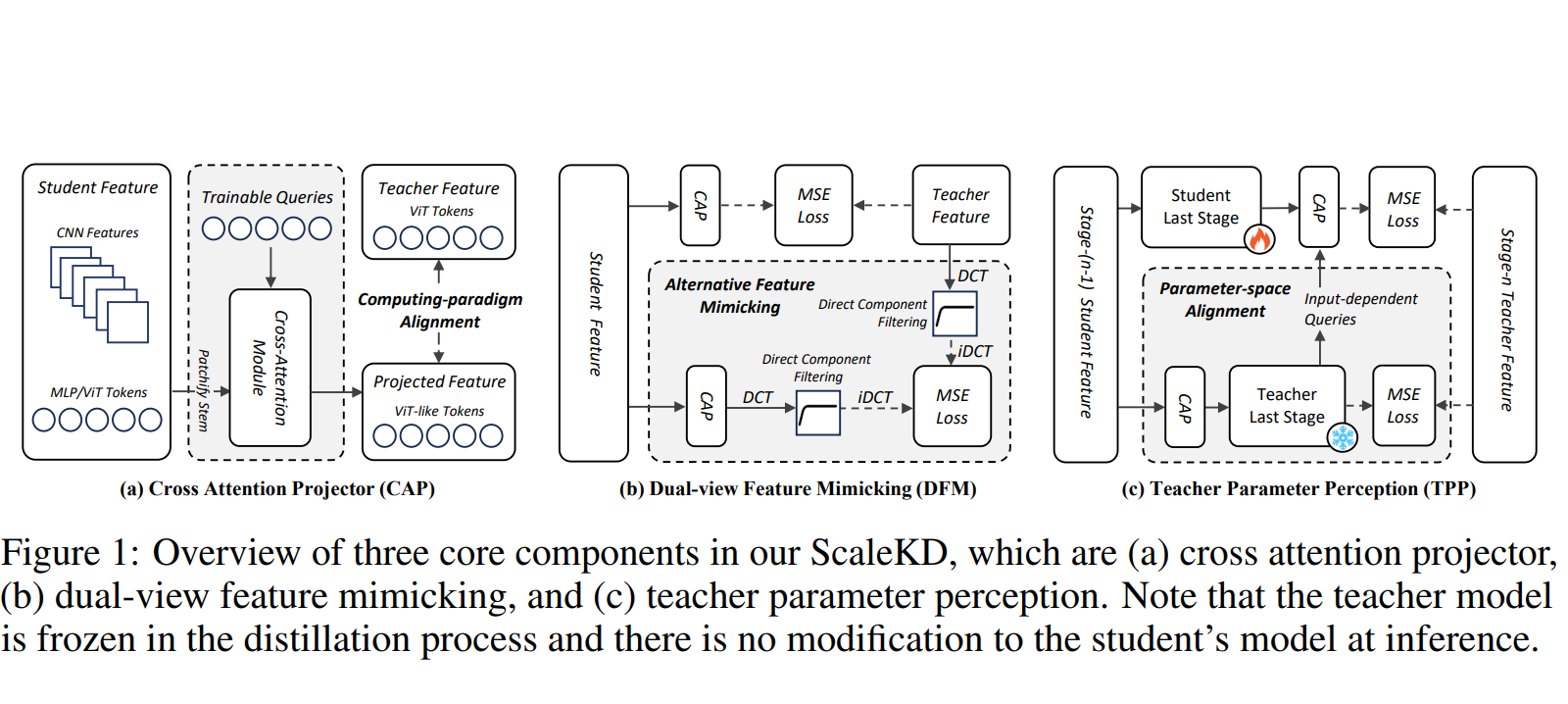

ScaleKD: Strong Vision Transformers Could Be Excellent Teachers

Jiawei Fan, Chao Li, Xiaolong Liu, Anbang Yao#

- It is the first work to explore how to transfer the scalable property of pre-trained large vision transformer models to smaller target models.

- ScaleKD can serve as a more efficient alternative to the time-intensive pre-training paradigm for any target student model on large-scale datasets.

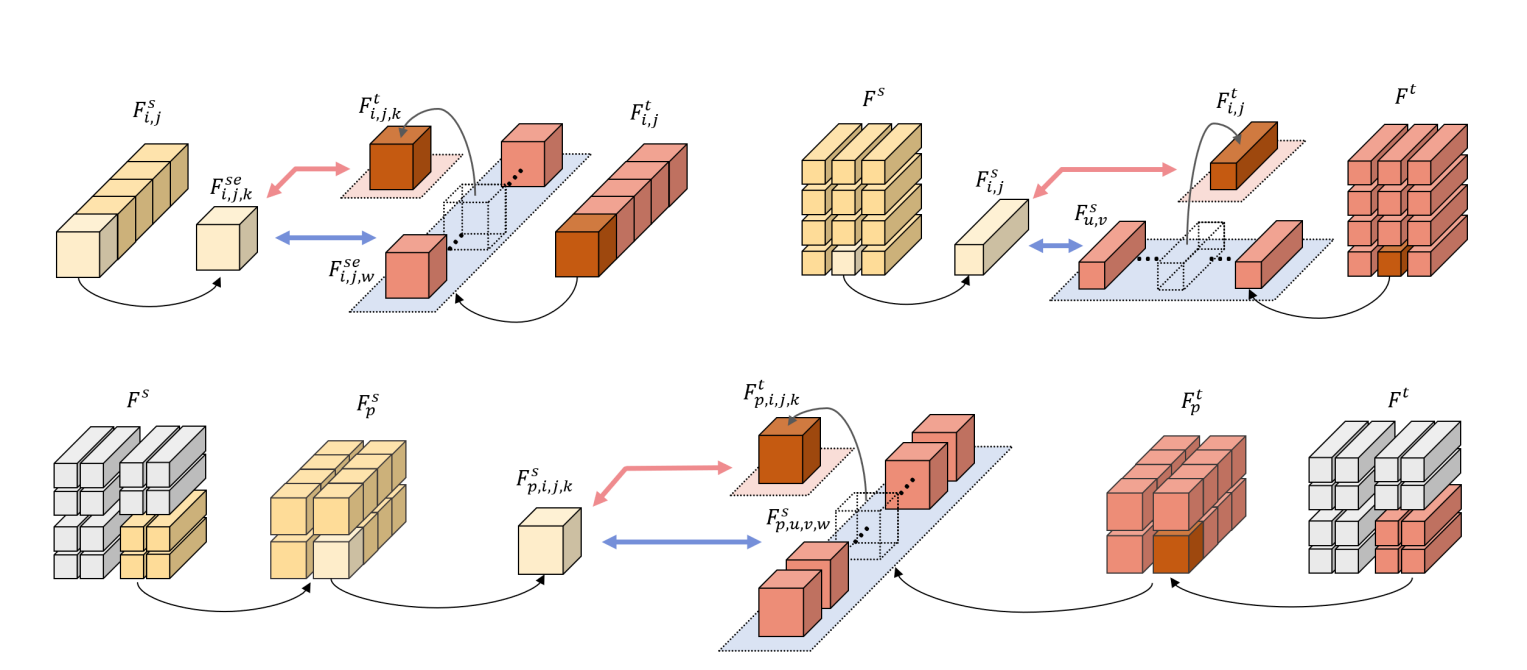

Augmentation-free Dense Contrastive Distillation for Efficient Semantic Segmentation

Jiawei Fan, Chao Li, Xiaolong Liu, Meina Song, Anbang Yao#

- Af-DCD is a new augmentation-free dense contrastive distillation framework for efficient semantic segmentation.

- Morse: Faster Sampling for Accelerating Diffusion Models Universally, Chao Li, Jiawei Fan, Anbang Yao, International Conference on Machine Learning (ICML 2025).

- DTR: An Information Bottleneck Based Regularization Framework for Video Action Recognition, Jiawei Fan*, Yu Zhao*#, Xie Yu, Lihua Ma, Junqi Liu, Fangqiu Yi, Boxun Li, Proceedings of the 30th ACM International Conference on Multimedia (ACM-MM 2022).

- TCVM: Temporal Contrasting Video Montage Framework for Self-supervised Video Representation Learning, Fengrui Tian*, Jiawei Fan*, Xie Yu, Shaoyi Du#, Meina Song, Yu Zhao, Proceedings of the Asian Conference on Computer Vision (ACCV 2022). (Oral, Best Paper Award Honorable Mention)

- Episodic Projection Network for Out-of-Distribution Detection in Few-shot Learning, Jiawei Fan, Zhonghong Ou#, Xie Yu, Junwei Yang, Shigeng Wang, Xiaoyang Kang, Hongxing Zhang, Meina Song, 26th International Conference on Pattern Recognition (ICPR 2022).

📖 Education

- 2020.09 - 2023.06, Master's degree, Beijing University of Posts and Telecommunications.

- 2016.09 - 2020.06, Bachelor's degree, joint program of Beijing University of Posts and Telecommunications and Queen Mary University of London.

🎖 Honors and Awards

- 2023.11 Intel Labs China DRA Award.

- 2022.12 Best Paper Award Honorable Mention, ACCV 2022.

- 2022.04 MEGVII Outstanding Intern.

- 2021.10 First Class Scholarship of 2021.

- 2021.05 Ranked 7th in the 3rd Person in Context Workshop at CVPR 2021.

- 2021.01 First Prize in Graduate Innovation and Entrepreneurship Competition.

- 2020.10 First Class Scholarship of 2020.

- 2020.06 BUPT Star of Youth. (10 students per year)

- 2020.02 Meritorious Winner (M) in the Mathematical Contest in Modeling (MCM).

- 2019.10 Tongding Enterprise Scholarship of 2019. (1 student per department)

- 2019.01 First Prize in Graduate Innovation and Entrepreneurship Competition. (as an undergraduate student)

- 2018.10 Hengtong Enterprise Scholarship of 2018.

- 2017.10 National Encouragement Scholarship of 2017.

💬 Academic service

Reviewer: ICML 2025, ICLR 2025, NeurIPS 2024, AISTATS 2025, ACCV 2022.

💻 Experiences

- 2022.07 - now, Intel Labs, China.

Researcher, supervised by Anbang Yao.

- 2021.12 - 2022.07, MEGVII Inc, China.

Research Intern, supervised by Yu Zhao.

- 2021.04 - 2021.10, Peking University, China.

Visiting Student, supervised by Yang Liu.

- 2019.09 - 2019.11, ByteDance Inc, China.

Data Engineer Intern.